Hello anons and cartoon avatars, Celt here to talk about serverless as it relates to cloud computing. Serverless has the potential to save enterprises significant time and money when applied to the correct use-case.

What Is Serverless?

It would be challenging to find a word more hated among cloud engineers than serverless. Serverless is a term that was created by salespeople and marketers. It directly targets legacy CTOs who never want to see or manage servers again.

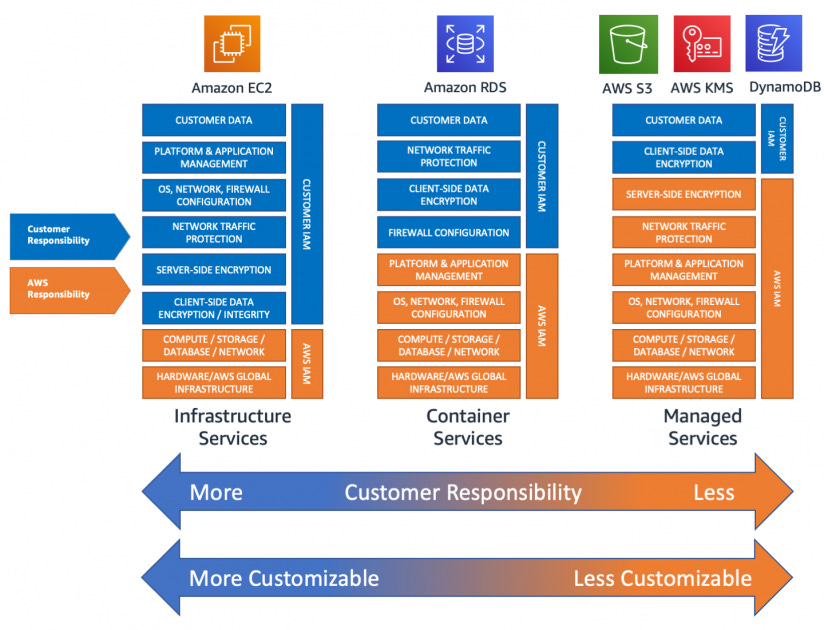

Serverless is the general concept that the cloud provider will completely manage the infrastructure, *which includes scaling.* The responsibility model would look like the following:

Notice that for managed services, the customer is only responsible for the data, client-side encryption, and Identity and Access Management (IAM). AWS manages the complicated parts.

The cloud providers are continuously innovating and improving these services on the customer’s behalf because they want to make the service better (obviously). So customers benefit from continuous improvement in the service *without any increase in investment.* This simply did not happen in the on-prem paradigm without significant investment in servers and hardware.

Why Serverless?

Global Infrastructure

This point is generally part of Why Cloud Makes Sense. Where it applies specifically to serverless services, the cloud provider has already built the service globally, so you can consume or build a *global* service with a couple of clicks. Take DynamoDB, AWS’s managed NoSQL database service: customers can consume a fully scalable, fault-tolerant, globally resilient, and secure NoSQL database. Running the database out of multiple regions around the world improves resiliency and can even improve performance for users close to those regions. How much would it cost to set up and scale this infrastructure without a cloud provider like AWS? Too much. Economies of scale allow cloud providers to build massive datacenters around the world and make services available to consume, which benefits the customer in many ways.

Faster time to market

Simple fact: the less time you spend setting up a globally resilient, scalable, distributed system, the more time you have to spend on the business logic, which is what drives your revenue. Iterating and prototyping quickly is very important in today’s landscape; speed kills. For example, a start-up might throw together an MVP on serverless services so it can invest all of its time in business logic rather than in setting up a virtual server, OS patching, network infrastructure configuration, etc. The return on investment in business logic is simply higher.
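To make that concrete, here is a minimal sketch of what an MVP endpoint might look like as an AWS Lambda function in Python, assuming an API Gateway proxy integration (which passes the request body as a JSON string in `event["body"]` and expects a response with `statusCode`, `headers`, and `body`). The menu, `calculate_order_total`, and the `items` request field are hypothetical; the point is that nothing in the deployed code deals with servers, OS patching, or networking.

```python
import json

# Hypothetical menu prices for an MVP; in practice these might live in a database.
MENU_PRICES = {"burger": 9.50, "fries": 3.25, "soda": 2.00}


def calculate_order_total(items: list[str]) -> float:
    """The business logic: sum the price of each known menu item, ignoring unknown ones."""
    return round(sum(MENU_PRICES[item] for item in items if item in MENU_PRICES), 2)


def lambda_handler(event, context):
    """Entry point invoked by Lambda; the event shape assumes an API Gateway proxy integration."""
    body = json.loads(event.get("body") or "{}")
    total = calculate_order_total(body.get("items", []))
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"total": total}),
    }


if __name__ == "__main__":
    # Because the handler is a plain function, it can be exercised locally with a fake event.
    fake_event = {"body": json.dumps({"items": ["burger", "fries"]})}
    print(lambda_handler(fake_event, None))
```

Everything outside `calculate_order_total` is thin glue, which is the trade the section describes: the provider runs and scales the function, and your time goes into the business logic.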

Abstracted Infrastructure

As alluded to in the last section, the infrastructure is completely abstracted and managed by the cloud provider. This is powerful because companies no longer need to hire specialized talent to manage the physical infrastructure. Generally, not managing infrastructure significantly reduces overhead, which means companies can reallocate infrastructure spending to higher-return activities like business logic, improving the bottom line overall.

Turbo Note: If you’ve worked at a big company, you know how much of a time sink managing infrastructure and maintenance can be. That overhead and time investment can drive companies to embrace serverless.

Automated Scaling

Serverless services are built to scale automatically with minimal configuration. It is hard to convey the power of consuming as much or as little compute power as you want, whenever you want, without having experienced how difficult this was in the on-premises paradigm. On-prem, it was not unusual to have 25% or even 50% of compute power sitting unused as excess capacity. Customers now have access to a virtually unlimited amount of compute power, storage, etc. at a moment’s notice. The flip side is also true: serverless services can scale down automatically. This is great for applications that see little traffic outside business hours, operate in a single time zone, or have similar usage patterns. Take your local small-business restaurant: it probably gets very little traffic from 1 AM to 8 AM local time. So why pay for dedicated compute power that sits idle? Use serverless and scale down automatically.

Turbo Note: Yes, you can scale down with dedicated compute, but there is a floor of 1 instance. With serverless the floor is 0, because you pay for what you *actually* use.
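A minimal Python sketch of the floor-of-1 versus floor-of-0 difference, using the restaurant example. It counts billable capacity-hours over one day for a hypothetical hourly traffic profile, assuming (purely for illustration) that one unit of capacity serves up to 100 requests per hour. Real serverless billing is per request and per unit of execution time rather than per capacity-hour, so treat this as a simplification of the idea, not a pricing model.

```python
import math

# Hypothetical hourly request counts for a local restaurant's ordering app,
# index 0 = midnight. Traffic is zero from 1 AM until 8 AM local time.
HOURLY_REQUESTS = [20, 0, 0, 0, 0, 0, 0, 0, 40, 80, 120, 300,
                   450, 250, 100, 90, 150, 380, 500, 420, 200, 90, 50, 30]

# Assumption for illustration: one unit of capacity handles up to 100 requests per hour.
REQUESTS_PER_INSTANCE = 100


def instances_needed(requests: int, floor: int) -> int:
    """Capacity units needed for one hour, never dropping below `floor`."""
    return max(floor, math.ceil(requests / REQUESTS_PER_INSTANCE))


def billable_hours(traffic: list[int], floor: int) -> int:
    """Sum of capacity-hours billed over the day for a given scaling floor."""
    return sum(instances_needed(r, floor) for r in traffic)


dedicated = billable_hours(HOURLY_REQUESTS, floor=1)   # scales down, but never below 1
serverless = billable_hours(HOURLY_REQUESTS, floor=0)  # scales all the way to 0
print(f"floor of 1: {dedicated} capacity-hours")
print(f"floor of 0: {serverless} capacity-hours")
```

With this made-up profile, the only difference between the two floors is the seven idle overnight hours; the busier daytime hours cost the same either way.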

Pay For What You Use

Due to the automated scaling, you pay for *only* what you consume. With dedicated compute in the form of a virtual server or EC2 instance, you are paying for provisioned compute power whether or not you use it. With serverless, you are essentially paying for execution time. When the serverless workflow is done and utilization returns to 0, you are no longer billed. With dedicated compute, you would have to turn off the instance to achieve a similar billing structure, which presents its own issues. Paying on demand for only the compute power you actually use is a relatively new concept, popularized by Function-as-a-Service (FaaS). The idea dates back to 2014, when AWS introduced Lambda functions. This paradigm did not exist on-prem, where you bought a server as a CapEx and paid for it to run 24/7 regardless of excess capacity. More on this here: Why Cloud Makes Sense

Turbo Note: This cost structure alone can decrease costs by 50% or more depending on the use case. Not paying for idle time/capacity is that significant.
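The arithmetic behind that claim is straightforward. The sketch below compares one month of an always-on instance with a pay-per-use model that, like AWS Lambda, charges for requests plus execution time multiplied by allocated memory (GB-seconds). All three rates are hypothetical placeholders rather than current AWS prices, and the workload numbers are made up; plug in real figures from your provider’s pricing page before drawing conclusions.

```python
# Hypothetical placeholder rates; NOT real AWS prices.
INSTANCE_PRICE_PER_HOUR = 0.10      # always-on virtual server
PRICE_PER_GB_SECOND = 0.0000167     # pay-per-use compute (memory x duration)
PRICE_PER_MILLION_REQUESTS = 0.20   # pay-per-use request charge

HOURS_PER_MONTH = 730


def always_on_cost(instances: int = 1) -> float:
    """Monthly cost of instances that run 24/7, busy or idle."""
    return instances * INSTANCE_PRICE_PER_HOUR * HOURS_PER_MONTH


def pay_per_use_cost(requests: int, avg_duration_s: float, memory_gb: float) -> float:
    """Monthly cost when billed only for execution time and requests."""
    compute = requests * avg_duration_s * memory_gb * PRICE_PER_GB_SECOND
    request_fee = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return compute + request_fee


# Hypothetical workload: 2 million requests a month, 200 ms each, 512 MB of memory.
always = always_on_cost()
per_use = pay_per_use_cost(2_000_000, avg_duration_s=0.2, memory_gb=0.5)
print(f"always-on:   ${always:.2f}")
print(f"pay-per-use: ${per_use:.2f}")
print(f"savings:     {1 - per_use / always:.0%}")
```

The gap comes almost entirely from idle time: the always-on instance is billed for all 730 hours in the month, while the pay-per-use bill scales with the work actually done.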

When Does Serverless Not Make Sense?

- When you have a constantly running, highly utilized application with predictable traffic. This makes the cost structure of serverless slightly less attractive. It depends on the specifics, but it is something to be aware of.
- When the overhead of managing infrastructure is not high (rare).
- Vendor lock-in. Since you are just consuming the service, your application can end up designed around how a specific serverless service works. If you designed the application to work with S3 or Lambda functions, it may not work the same way with Azure. Remember, The Future is Multi-Cloud, and we generally want to reduce vendor lock-in.
- Decreased observability/traceability. Due to the high level of abstraction, you do not have much visibility under the hood, so to speak. This can create issues with troubleshooting, debugging, and tracing.
- Lack of control. Closely related to the last bullet: since the infrastructure is abstracted, you have no control over the low levels of the tech stack. This can create issues for some use-cases. If you need an OS-level optimization or dependency, a serverless service may not be an option.
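The first point above is essentially a break-even calculation: as steady traffic grows, the pay-per-use bill eventually overtakes a fixed always-on bill. The sketch below solves for that crossover using hypothetical placeholder rates (not real AWS prices). It deliberately ignores two things: that a single always-on instance may not be able to absorb high volumes, and the infrastructure-management overhead discussed throughout this post. Both would push the real break-even point higher.

```python
# Hypothetical placeholder rates; NOT real AWS prices.
INSTANCE_PRICE_PER_HOUR = 0.10
PRICE_PER_GB_SECOND = 0.0000167
PRICE_PER_MILLION_REQUESTS = 0.20
HOURS_PER_MONTH = 730


def cost_per_request(avg_duration_s: float, memory_gb: float) -> float:
    """Pay-per-use cost of one invocation: compute time plus the request fee."""
    return (avg_duration_s * memory_gb * PRICE_PER_GB_SECOND
            + PRICE_PER_MILLION_REQUESTS / 1_000_000)


def break_even_requests(avg_duration_s: float, memory_gb: float) -> float:
    """Monthly request volume at which pay-per-use equals one always-on instance."""
    always_on = INSTANCE_PRICE_PER_HOUR * HOURS_PER_MONTH
    return always_on / cost_per_request(avg_duration_s, memory_gb)


def cheaper_option(monthly_requests: int, avg_duration_s: float, memory_gb: float) -> str:
    """Return which billing model is cheaper for a steady monthly volume."""
    threshold = break_even_requests(avg_duration_s, memory_gb)
    return "pay-per-use" if monthly_requests < threshold else "always-on"


threshold = break_even_requests(avg_duration_s=0.2, memory_gb=0.5)
print(f"break-even: ~{threshold / 1e6:.1f} million requests/month")
print(cheaper_option(5_000_000, 0.2, 0.5))
print(cheaper_option(100_000_000, 0.2, 0.5))
```

Below the threshold, idle time dominates and pay-per-use wins; well above it, a constantly busy instance is the cheaper way to buy the same compute, which is exactly the "constantly running and utilized" case.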

Summary

We are seeing firms adopt serverless services because they provide an attractive cost structure where you only pay for what you actually use, frictionless scaling to meet customer demand, and abstracted infrastructure with no maintenance overhead. Remember, the time and investment that went toward infrastructure can now be allocated to improving the business logic, which has a higher return.

Thanks for reading anons,

-Celt